For teachers, the desire to adopt educational apps that improve student learning is complicated by the growing number of available apps and by the difficulty of judging their effectiveness. For school leaders, the question is whether the financial and administrative burden of adopting a new app is justified by its return on student learning. In a world of many choices and limited review time, what influences teacher and principal decisions?

To address these questions, the authors use two survey experiments to examine whether an app’s adoption is affected by two factors: popularity and efficacy.

The first experiment was conducted online from April to June 2024 with teachers from 11 school districts serving about 161,000 preschool and elementary students. The final sample comprised 1,104 teachers: 289 pre-K teachers, 206 kindergarten teachers, and about 150 teachers from each of grades 1-4. The experiment was embedded in a 10-minute survey that also asked teachers about their familiarity with, and barriers to, recommending digital math games.

The authors use a within-subjects design, in which each participant is exposed to every condition and therefore serves as their own control, allowing a direct comparison of each teacher's responses across conditions. Teachers view short descriptions of four fictional math learning apps and rate how likely they are to recommend each app to students for home use. Each app description is followed by one of four types of information: 1) a statement about research evidence showing the app's effectiveness, 2) a statement that the app is popular among teachers, 3) both the efficacy and the popularity statements, or 4) no additional information. The authors find the following:

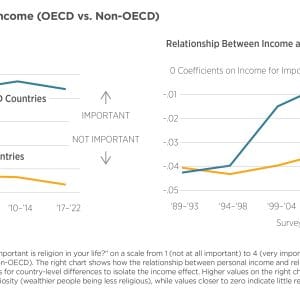

- The likelihood of a teacher recommending a math app increases by 0.24 standard deviations (SD) when teachers are told that there is research evidence that the app improves math skills, and by 0.21 SD when they are told that the app is popular among educators.

- Telling teachers about both the app’s popularity and its efficacy results in a 0.30 SD increase.

- The treatment effect on pre-K teachers is half as large as that on K-4 teachers, which could indicate that preschool teachers' opinions are less easily swayed than those of elementary school teachers. Because teachers respond about as strongly to peer popularity as to research evidence, the results may also point to a "bandwagon" effect.
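To make the "effect in SD units" idea concrete for a within-subjects design, here is a minimal sketch using invented ratings (a hypothetical 1-5 scale and six hypothetical teachers, not data from the study). It assumes each teacher gives one rating per condition and scales the average within-teacher change by the standard deviation of the control ratings; the authors' actual estimator (for example, a regression on a standardized outcome) may differ, and the differences between app descriptions are ignored here.

```python
from statistics import mean, stdev

# HYPOTHETICAL ratings (1-5 scale, invented for illustration): one rating per
# teacher per condition. Position i in every list is the same teacher, which is
# what makes the comparison within-subjects.
ratings = {
    "control":    [3, 2, 4, 3, 3, 2],
    "efficacy":   [4, 3, 4, 4, 3, 3],
    "popularity": [4, 3, 4, 3, 4, 3],
    "both":       [4, 4, 5, 4, 4, 3],
}


def effect_in_sd(treated: list[int], control: list[int]) -> float:
    """Average within-teacher change, expressed in SD units of the control ratings.

    Assumption: scaling by the control-condition SD; the study's own method may differ.
    """
    # Each teacher is compared with themselves, so person-level baselines cancel out.
    diffs = [t - c for t, c in zip(treated, control)]
    return mean(diffs) / stdev(control)


# Effect of each informational condition relative to "no additional information".
effects = {
    cond: effect_in_sd(vals, ratings["control"])
    for cond, vals in ratings.items()
    if cond != "control"
}

for cond, d in effects.items():
    print(f"{cond:>10}: {d:+.2f} SD")
```

The toy numbers are chosen only to show the mechanics and are not meant to reproduce the study's 0.21-0.30 SD estimates.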

In the second experiment, also conducted online between April and June 2024, pre-K school leaders were randomly assigned either to watch an informational video on research evidence supporting a digital game or to view a control video describing analog learning materials without discussing research evidence. After the video, pre-K leaders were asked about 1) their likelihood of endorsing a digital math game among other learning resources for pre-K students to use at home, from "Very Unlikely" to "Very Likely," and 2) their willingness to pay, using school funds, for a digital math game for each pre-K student, on a scale from $0 to $10. The authors find the following:

- The informational video has no significant effect on leaders' likelihood of endorsing the digital math game and produces only a marginally significant increase in their willingness to pay for it from school budgets.

- While these results might suggest that school leaders are not easily influenced, the authors caution that they may reflect the experiment's methodology, and that a larger sample might reveal effects resembling those in the teacher survey experiment.

This paper contributes to an extensive literature on what influences decision making in various contexts, and more research is needed before confident policy recommendations can be made. That said, the study shows that teachers are influenced by research evidence, which underscores the importance of rigorously evaluating ed-tech products.