The second meeting of the Macro Financial Modeling (MFM) Working Group gathered 55 scholars in New York for close review and discussion of research that examines financial sector shocks to the economy.

The goal, according to project co-director Lars Peter Hansen, was “to think hard and critically about incorporating financial frictions into macroeconomic models and identify productive avenues for future exploration.”

Empirical Evidence Connecting Financial Markets and Macroeconomic Performance

Some work suggests that credit spreads are predictive of economic distress, because they reflect disruption in credit supplies, either through the quality of borrowers’ balance sheets or deterioration in the health of financial intermediaries. Simon Gilchrist of Boston University examined the evidence relating credit spreads to economic activity. In work with Egon Zakrajšek, he constructed a credit spread and broke it down into components to identify the source of predictive power. They identified a component they called the excess bond premium (EBP) that represents the price of default risk. The EBP offered large gains in power to predict GDP growth, payroll, and unemployment, at least over medium-term horizons.

Mark Watson of Princeton presented related work that addressed two questions: How was this recent Great Recession qualitatively different from others? What were the important shocks that contributed to it? Watson summarized efforts to use a “dynamic factor model” fitted to a large cross section of time-series data to find answers to these questions. He found that financial and uncertainty shocks were the main cause.

Using a substantially different econometric approach, Frank Schorfheide of the University of Pennsylvania along with Marco del Negro used dynamic stochastic general equilibrium (DSGE) models to identify the “structural” shocks needed to explain macroeconomic time series data seen during the recent recession.

In discussion, Nina Boyarchenko of the Federal Reserve Bank of New York and Nobuhiro Kiyotaki speculated on which economic models might best explain the time series evidence and sharpen its interpretation. Lars Peter Hansen, Christopher Sims, and others pushed for a better understanding of the “shocks” that were identified as drivers of the current recession.

Contingent Claims Analysis Applied to the Financial and Government Sectors

One reason traditional risk models do not adequately capture systemic risks is that they are based mostly on accounting flows and are therefore backward-looking, while risk assessment is inherently forward-looking. Contingent claims analysis (CCA), an approach presented by Dale Gray of the International Monetary Fund, addresses this weakness by incorporating liabilities and future obligations using market data with the associated adjustments for risk.

In discussion, participants expressed support for the basic idea but stressed the need for a better understanding and assessment of the inputs into this analysis. They also wondered how far such an approach can go to conduct policy analysis without a better understanding of the determinants of risk prices.

Comparing Systemic Risk Measures

With no fewer than 31 methods proposed to measure systemic risk, it’s unclear which methods work best under what circumstances and why. Much of Friday’s discussion focused on that question.

Bryan Kelly of the University of Chicago Booth School of Business presented work inspired by the first MFM meeting that attempted to understand how the many different models for analyzing systemic risk might work collectively. With Stefano Giglio and Xiao Qiao, he compiled more than 20 available measures and applied them to data going back as far as 1926. “The only thing that is clear is that they all jump in 2008, during the financial crisis, but that’s what they were constructed to look at,” Kelly said.

Kelly categorized and then compared the various models by the key factor they analyze: volatility, comovement, contagion, and illiquidity. His team found that covariation and volatility were driving the most highly correlated measures. They used partial multiple quantile regression to examine whether there was information in the collective measures that couldn’t be obtained through any single measure. In discussion, several participants noted that there are different causes for macroeconomic disturbances, and they require different methods for accurate analysis.

Future work in this area might expand the analysis from asset prices to also include commodities and currency fluctuations and to formally split some of the measures into groups depending on the more precise aims of the measures.

Kelly and MFM project co-director Andrew Lo of MIT both pointed out that the lack of a clear definition of systemic risk explains the profusion of different approaches to its measurement. They both referenced a recent essay by Lars Hansen, “Challenges in Identifying and Measuring Systemic Risk,” as a valuable overview on this point.

Lo and graduate student Amy Zhou ran a comparison of the 31 measures to see which best predicted financial events generally viewed as systemic. Measures like marginal expected shortfall were fairly predictive, but evidence of stress in hedge funds was more strongly correlated. Consumer credit markets also show early signs of a future credit crunch.

One participant pointed out that with 31 systemic risk measures proposed and thousands of papers published so far, “We should all pull together on this. There is plenty of work to go around.”

One specific model where there is an opportunity for expert macroeconomists’ input is the Complex Research Initiative for Systemic Instabilities (CRISIS), outlined by Doyne Farmer of Oxford University. Eleven groups from seven nations are working on an ambitious computer simulation of a system of heterogeneous interacting agents that could be used to perform conditional forecasts and policy analysis in any state of the economy.

Robert Engle of NYU described a macro-financial approach called SRISK that tries to pin down the macroeconomic impact of a crisis based on firms’ available capital. SRISK is a computed estimate of the amount of capital a financial institution would need to raise in order to function normally if a financial crisis occurs.

Constructed from a firm’s size, leverage ratio, and risk, the SRISK measure predicts econometrically how much equity would fall in a crisis and how much capital would need to be raised to compensate for the drop in valuation. In a financial crisis, all firms will need to raise capital simultaneously and credit will dry up, leaving taxpayers as the only source of capital. “The bigger the SRISK, the bigger the threat to macro stability is,” Engle noted.
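As a rough illustration of how size, leverage, and risk combine in such a measure, the sketch below uses the capital-shortfall form commonly associated with SRISK: a required capital ratio applied to crisis-time assets, minus crisis-time equity. The 8% capital ratio `k`, the function name, and the example figures are assumptions made for illustration; they are not taken from the presentation.

```python
def srisk(debt, equity, lrmes, k=0.08):
    """Expected capital shortfall in a crisis, floored at zero.

    debt   -- book value of liabilities
    equity -- current market value of equity
    lrmes  -- assumed fraction of equity value lost in a crisis (0..1)
    k      -- assumed prudential capital ratio (hypothetical default)
    """
    crisis_equity = (1.0 - lrmes) * equity
    # Capital required on crisis-time assets minus the equity left in a crisis.
    shortfall = k * (debt + crisis_equity) - crisis_equity
    return max(shortfall, 0.0)


# Hypothetical highly levered firm: equity is expected to halve in a crisis.
levered = srisk(debt=900.0, equity=100.0, lrmes=0.5)

# Hypothetical well-capitalized firm: no shortfall, so the measure is zero.
well_capitalized = srisk(debt=100.0, equity=100.0, lrmes=0.1)
```

Under these assumptions the measure rises with size (through debt and equity), with leverage (debt relative to equity), and with risk (the assumed crisis loss in equity).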

Anil Kashyap of the University of Chicago Booth School of Business pointed out concerns with risk measures based chiefly on asset prices, which can be misleading. For example, CDS spreads gave little sign that Lehman and Bear Stearns were near collapse. Conversely, there are clear examples of equity prices collapsing, as in the 1987 market crash and the Long Term Capital Management episode, where there was no major effect on the macroeconomy, Kashyap noted.

In an executive session, the group determined priorities for future work and agreed to expand dissertation support.

Presentations

Thursday, September 13, 2012

What Do We Learn From Credit Market Evidence?

Because financial markets are forward-looking, asset prices embed expectations about future macroeconomic outcomes. However, extracting these expectations is complicated by the existence of time-varying risk premia.

Credit spreads are informative because they reflect disruptions in credit supply, either through the worsening of borrowers’ balance sheets or the deterioration in the health of financial intermediaries. Other research suggests that corporate bond prices may be a better signal of the future course of economic fundamentals than equity prices.

The authors propose a new indicator, the “GZ credit spread”, which aggregates information from individual corporate bond prices while controlling for the schedule of cash payments in each bond, the default risk of the issuer, and (for many securities) the value of the embedded call option. They decompose the spread into an expected-default component and a residual, and show that the latter (the “excess bond premium”, or EBP) has high predictive content for macroeconomic outcomes, especially during recessions.

The authors interpret the EBP as reflecting the price of default risk, rather than the risk of default itself. It therefore acts as a gauge of credit-supply conditions, capturing variation in the risk attitudes of credit suppliers. In fact, the EBP correlates very highly with broker-dealer CDS spreads before and during the Lehman event; however, unlike CDS spreads, the data used to calculate the EBP goes back much further in time.
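The idea of splitting a spread into a part explained by default risk and a residual can be sketched with a simple regression. The example below is a deliberately simplified stand-in, not the authors' specification: it regresses a made-up spread series on a single made-up default-risk proxy and treats the residual as an EBP-like component. All names and numbers are hypothetical.

```python
def ols_fit(x, y):
    """Univariate OLS with an intercept; returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def decompose_spread(default_risk, spread):
    """Split the spread into a fitted default component and a residual."""
    intercept, slope = ols_fit(default_risk, spread)
    predicted = [intercept + slope * d for d in default_risk]
    residual = [s - p for s, p in zip(spread, predicted)]
    return predicted, residual


# Made-up illustrative data: a default-risk proxy and a credit spread.
default_risk = [1.0, 2.0, 3.0, 4.0, 5.0]
spread = [1.2, 1.9, 3.4, 3.8, 5.2]
predicted, residual = decompose_spread(default_risk, spread)
```

By construction the residual is uncorrelated with the default-risk proxy in sample, which is what allows it to be read as variation in the spread not attributable to expected default.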

A good in-sample fit is rarely indicative of true forecasting power. To address this issue, the authors include issuer-level credit-spread information organized into portfolios, as well as standard macroeconomic and asset-market indicators, in a large Bayesian Model Averaging exercise to determine which variables best forecast macroeconomic outcomes. They find large improvements in forecasting performance relative to an AR(2) model for all indicators except consumption. Moreover, removing the credit-spread variables from the analysis results in almost no improvement, or an even worse performance, relative to the AR(2) benchmark. Most of the posterior weight at the end of the sample is on credit-spread variables. In fact, the model begins to place high posterior weights on credit-spread variables in 2001. The authors interpret this as evidence that improvement in forecast performance from credit spreads occurs during recessions, rather than at all times unconditionally, because most of the information in the sample is in the 2001 and current recessions.
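Comparisons "relative to an AR(2) model" are typically reported as a ratio of out-of-sample forecast errors. The sketch below shows one common way to compute such a ratio, using root-mean-squared error; the choice of RMSE and all forecast values are assumptions for illustration, not results from the paper.

```python
import math


def rmse(forecasts, actuals):
    """Root-mean-squared forecast error."""
    squared = [(f - a) ** 2 for f, a in zip(forecasts, actuals)]
    return math.sqrt(sum(squared) / len(squared))


def relative_rmse(model_forecasts, benchmark_forecasts, actuals):
    """Values below 1 mean the model beats the benchmark out of sample."""
    return rmse(model_forecasts, actuals) / rmse(benchmark_forecasts, actuals)


# Hypothetical out-of-sample growth outcomes and two sets of forecasts.
actuals = [2.0, 1.0, -1.0, -3.0]
ar2_forecasts = [2.0, 2.0, 1.0, 0.0]      # stand-in for the AR(2) benchmark
model_forecasts = [2.0, 1.0, 0.0, -2.0]   # stand-in for the richer model
ratio = relative_rmse(model_forecasts, ar2_forecasts, actuals)
```

A ratio well below one on held-out data, rather than a good in-sample fit, is the kind of evidence the paragraph above refers to.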

Discussion

David Backus cited earlier work by Stock & Watson showing that financial variables like credit spreads are not useful in forecasting macroeconomic events. Though he prefers the current results, he wonders what differentiates them. Is it just the sample period? He also presented some cross-correlation evidence on the lead-lag relationships with industrial production of various predictors that crudely mirror the “GZ credit spread” and the EBP, including the term spread, excess equity returns, and the real risk-free rate, showing that many financial-market indicators have the same properties as credit spreads. Interestingly, bond returns appear to have the same lead-lag shape as equity returns; he interpreted this fact as evidence that a lot of what we see in financial markets reflects the business cycle. He also noted that there is some variation in these shapes across maturity and rating quality of the issuers, an interesting issue for future research.

Nina Boyarchenko presented a theoretical model she has developed with Tobias Adrian which suggests a mechanism through which an EBP shock could forecast future macroeconomic outcomes. In the model, intermediaries finance the capital rented to firms by borrowing from households. The balance-sheet dynamics of the intermediaries drive both credit spreads and economic activity. The model generates a negative correlation of investment to leverage shocks, which along with the positive risk price for leverage shocks implies that expected excess returns are increasing with a positive shock to leverage. These shocks can be generated by discount rate shocks to households, which worsen credit conditions, providing the link between leverage, credit conditions, and investment that we see in the data.

John Heaton questioned whether the standard distance-to-default measurement is appropriate, since it requires an assumption of time-invariant expected returns; the model might interpret an increased price of default risk as a higher expectation of default, invalidating the decomposition which defined the EBP, and thus its interpretation. Furthermore it’s not clear in these models whether we are using, or getting, risk-neutral or risk-natural probabilities. Andrew Lo commented on some recent work by Hui Chen using time-varying expected returns that can explain the credit-spread puzzle, which could be used in the current framework to sharpen the interpretation of the EBP.

Thomas Sargent and Christopher Sims pointed out that a formal Bayesian interpretation of the Bayesian Model Averaging procedure is not appropriate, because we don’t really believe in the linear form and Gaussian errors. Sims went on to note that models linking finance to macroeconomics result in 4-, 5-, or 6-standard-deviation shocks, inconsistent with Gaussian errors. He suggested, at a minimum, allowing the shock variance to change during recessions to address this issue.

Furthermore, Sims cautioned against interpreting these “financial stress” shocks as explaining other outcomes; rather, they are themselves in need of explanation. Anil Kashyap disagreed, arguing that the “financial plumbing” gets clogged. Surveying the Federal Reserve and Treasury programs during the crisis, he claimed that the most successful policies were those focused on the solvency problems of intermediaries, rather than those that presumed illiquidity in markets as the fundamental problem. Mark Gertler agreed with Kashyap, but interpreted Sims’ comment as pertaining to the difference between the initiating forces, which we do not understand very well, and the propagating forces, which relate to credit conditions and the financial accelerator.

Andrew Lo asked about whether there was more information to be extracted from these credit spreads than is apparent in their simple average, and suggested looking at the cross-sectional variation in spreads as well, which might help identify the difference between idiosyncratic and common shocks across the business cycle. He also proposed tying the EBP more closely to CDS spreads, not just of broker-dealers but across all sectors, which may be more timely indicators than bond prices.

Presenters

- Simon Gilchrist, Professor of Economics, New York University

- David Backus, the Heinz Riehl Professor, New York University’s Stern School of Business

- Nina Boyarchenko, Research Officer, Federal Reserve Bank of New York


What We Can Learn from Contingent Claim Analysis

This session addressed the limitations to understanding and studying systemic risk using traditional macro and banking models. A major reason for such limitations is that these models are often based on analysis of stocks, flows and accounting balance sheets and do not focus on risk exposures, which are forward looking. This traditional analysis cannot capture the non-linearities that we see.

To overcome these limitations the authors propose to use Contingent Claims Analysis (CCA), put forth by Robert Merton, at the macro level. CCA treats assets as random variables, and equity and liabilities as derivatives on such assets, with liabilities being put options and equities call options.
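The option analogy can be made concrete with the textbook Merton setup, in which equity is a European call on the firm's assets struck at the face value of debt, and risky debt equals default-free debt minus a put. The sketch below assumes lognormal assets, a single zero-coupon debt issue, and a constant risk-free rate; the function names and inputs are hypothetical, and this is an illustration of the general idea rather than the presenters' implementation.

```python
import math


def norm_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def merton_claims(assets, debt_face, vol, rate, horizon):
    """Return (equity, implicit_put, risky_debt) under Merton assumptions."""
    sqrt_t = math.sqrt(horizon)
    d1 = (math.log(assets / debt_face)
          + (rate + 0.5 * vol ** 2) * horizon) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t
    pv_debt = debt_face * math.exp(-rate * horizon)  # default-free value
    # Equity: call option on assets with strike equal to the debt's face value.
    equity = assets * norm_cdf(d1) - pv_debt * norm_cdf(d2)
    # Implicit put: expected loss borne by creditors (or by a guarantor).
    implicit_put = pv_debt * norm_cdf(-d2) - assets * norm_cdf(-d1)
    risky_debt = pv_debt - implicit_put
    return equity, implicit_put, risky_debt


# Hypothetical balance sheet: assets of 100 against debt with face value 80.
equity, implicit_put, risky_debt = merton_claims(
    assets=100.0, debt_face=80.0, vol=0.25, rate=0.02, horizon=1.0
)
```

Equity and risky debt sum to the asset value, and the put grows non-linearly as assets fall toward the debt barrier, which is the non-linearity the approach is meant to capture.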

This approach is mainly used for corporations since it is natural to think about their balance sheets and the data on equity prices is readily available to estimate the value and volatility of the assets. The authors push CCA further and extend it to include other agents and institutions, in particular the government, banks, and households.

Each of these constituencies has a balance sheet, which can at least be described qualitatively, even though actual measurement may be challenging. The approach has several advantages. First, it is quite general as it allows for all types of contingent claims including implicit contingent claims such as implicit guarantees provided by the government to financial institutions (often referred to as implicit put options). Second, since the payoffs of contingent claims are non-linear, by using this approach, one can capture the non-linearities that we observe especially in times of crisis and which are not captured by the traditional balance sheet analysis. Third, the balance sheet approach allows study of the interconnections among different sectors and how risk is transmitted from one sector to another since the liabilities of one sector are the assets of other sectors. Finally, CCA analysis is robust since it does not depend on knowing expected returns or preferences.

As an example of how to extend the analysis, the authors have taken the analysis at the national level to study systemic risk across countries. They have several papers that illustrate how to apply the approach in that context.

According to the authors the approach is also useful for policy applications. The reason is that since CCA measures risk exposures, and we know where such exposures are in the balance sheets of different agents of the economy, actions to mitigate systemic risk by reducing such exposures are straightforward to implement.

Discussion

John Heaton raised two issues when implementing CCA analysis. The first one is the well-known concern that arises when one applies the Merton model to study default risk: the probabilities obtained are in the risk neutral measure and, consequently, one needs a model to go from risk neutral probabilities to risk natural probabilities. The authors played down this concern by arguing that in the study of systemic risk we can focus on the valuation of the different contingent claims (which we know how to do) and not on probabilities of default.

The second source of concern has to do with the implementation of CCA. Heaton pointed to the many parameter choices that may affect the results. He also noted that implementing CCA may require many valuations that we do not observe.

Heaton highlighted that a positive characteristic of CCA is that it strives to use information in various assets and markets to gather information about the economy and systemic risk.

Hui Chen pointed to three challenges in using CCA to study systemic risk. The first problem is related to implementation. As an example, measurement of the required variables is a key issue for many sectors. Also, there may be a great degree of model dependence. It is not clear that aggregation of different risks is the best approach. It may be better to impose a common risk structure for the economy.

The second problem, which opens a possible path for future research, is how to capture the interconnections in the macroeconomy. For example, the credit risk of households depends on bank lending capacity. The CCA approach may not capture this and the challenge going forward is how to make the model operational by including these types of connections.

The third problem brought up by Chen is that the CCA approach relies on the assumptions used to price derivatives, particularly on market completeness, which certainly does not hold in several situations in the context of systemic risk as presented by the authors.

Regarding the policy-making applications of CCA, Lars Hansen pointed out that a natural concern is how to deal with endogeneity in the changes of risk exposures in response to policy interventions.

Anil Kashyap illustrated some of the challenges when applying CCA by bringing up the example of banks. Even though for banks, core deposits are a liability in accounting terms, research suggests that core deposits are actually a source of value. Hence, it is not always obvious what to use for assets and liabilities in CCA.

Christopher Sims pointed out that CCA is not a way to get answers, but a framework for showing a range of uncertainty, to quantify such uncertainty and learn where it is. The concerns about assumptions could be clarified by showing how sensitive the results are to those assumptions.

Finally, some avenues for future research were suggested during the discussion. Besides the question of interconnections between sectors mentioned above, one could study how to implement the analysis in the household sector where data collection is a challenge, how to integrate financial systemic risk indicators from the CCA analysis with DSGE models, how to get the dynamics of systemic risk with this approach, and how to go from risk to systemic risk in this framework.

Presenters

- Dale Gray, Senior Risk Expert in the Monetary and Capital Markets Department of the IMF

- Hui Chen, Jon D. Gruber Career Development Professor in Finance, Massachusetts Institute of Technology, Sloan School of Management

- John C. Heaton, Joseph L. Gidwitz Professor of Finance, Booth School of Business


Friday, September 14, 2012

What’s Systematic Among Systemic Risk Measures?

Although there has been much recent academic, policymaker, and media attention paid to systemic risk, there is no single accepted definition. Different authors focus on volatility and instability in financial markets, co-movement among financial and other variables, contagion effects, and illiquidity. A few emphasize the adverse effects of these phenomena on macroeconomic outcomes.

Despite difficulties in defining what exactly we mean by systemic risk, previous research has proposed a wide variety of measures to capture it. Understanding the commonalities between these various measures will help us understand what exactly they’re capturing, and, most importantly from a policy perspective, how it relates to macroeconomic outcomes.

The authors provide a quantitative analysis of a sample of the systemic risk measures surveyed by Bisias, Flood, Lo & Valavanis (2012). The vast majority of measures rely heavily on market prices, especially equity prices, because these data are widely available and relatively clean. Future work should focus on direct linkages, such as interbank loans or CDS (though the latter do not go back very far), stress tests, and Contingent Claims Analysis (CCA).

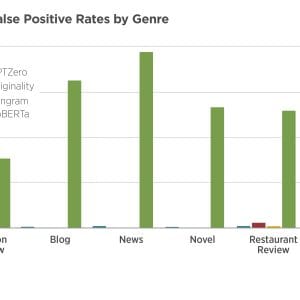

Consistent with the fact that most measures were proposed following the financial crisis, they all feature large jumps in the recent sample. Principal components analysis of the measures reveals that they are dominated by a volatility/correlation factor, though there does seem to be a strong credit spread/leverage component. Some measures, such as CoVaR, Absorption, Turbulence, and the TED spread, seem to Granger-cause the others.

In order to extract a common factor ideal for forecasting while retaining possible nonlinear effects, the authors propose a partial quantile regression approach, similar to the three-pass regression filter in recent work by Kelly & Pruitt (2012). This approach can flexibly model heterogeneity in the entire conditional distribution of macroeconomic outcomes that may be different from the behavior of the conditional mean. Relative to simple principal components analysis or straightforward multiple quantile regression, partial quantile regression seems to improve out-of-sample forecasts of the 20th percentile of all macroeconomic indicators except consumption.
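Forecasts of a conditional quantile such as the 20th percentile are usually scored with the asymmetric "check" (pinball) loss rather than squared error. The sketch below shows that loss and a toy comparison between two constant forecasts; it illustrates the evaluation criterion only, not the partial quantile regression estimator itself, and the data are made up.

```python
def pinball_loss(actual, predicted_quantile, tau):
    """Check loss for a single observation at quantile level tau."""
    diff = actual - predicted_quantile
    # Outcomes above the forecast are weighted by tau, those below by 1 - tau.
    return tau * diff if diff >= 0 else (tau - 1.0) * diff


def mean_pinball_loss(actuals, predictions, tau):
    """Average check loss over a set of forecasts."""
    losses = [pinball_loss(a, p, tau) for a, p in zip(actuals, predictions)]
    return sum(losses) / len(losses)


# Made-up outcomes; compare a low constant forecast with the sample mean.
actuals = [-2.0, 0.0, 1.0, 2.0, 3.0]
tau = 0.2
loss_low = mean_pinball_loss(actuals, [0.0] * 5, tau)
loss_mean = mean_pinball_loss(actuals, [0.8] * 5, tau)
```

At the 20th percentile, overpredicting is penalized four times as heavily as underpredicting, so a forecast near the lower part of the distribution scores better than the mean.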

An important issue with these exercises is the “joint hypothesis” problem: if we cannot use these measures to predict adverse macroeconomic outcomes, is it because systemic risk is not important, or because we’re not accurately measuring systemic risk? In addition, we only observe macroeconomic outcomes after policymakers have reacted. Is there a way to measure outcomes in the absence of such policy? Finally, how can we extract a “pure” systemic risk indicator from these measures, given that the part of each measure that is not systemic risk is not simply noise?

Discussion

Ta-Chung Liu Distinguished Visitor Robert Engle noted that conditional quantiles are usually sensitive to volatility, relative to the conditional mean. Thus it is surprising that the measures correlated with volatility are not doing much in the quantile regression. This finding suggests that these measures are not doing what we think they are. Understanding the relationship between macroeconomic and financial market volatility, and how this relates to forecast errors, may be important in this regard.

Mark Flood questioned whether the focus on forecasting is appropriate. He cited the failure of Lehman Brothers, in which there was no need to forecast anything, but an intense analysis of systemic risk would have been valuable. Furthermore, it would be useful to understand why some of these measures, such as CoVaR, lit up during the current crisis but not during other financial crises, such as those in 1987 or 1998.

Lars Hansen suggested mapping measures directly to the motivating quotes from the early part of the presentation, rather than attempting to reduce them all to a single dimension. Andrew Lo agreed, encouraging an analysis of multiple measures and their effects across various kinds of crises. On a similar note, Anil Kashyap proposed dividing measures into categories based on their theoretical mechanisms—for example, measures focused on credit supply and institutions, measures stemming from agency problems on the borrower’s side, or measures focusing on incomplete markets or fire sales—and averaging inside each category, rather than averaging over a heterogeneous set of measures that are all designed to capture something different.

Andrew Lo recommended adding commodity prices and exchange rates to the analysis, in order to reduce the dependence on equity prices without having to shorten the usable sample. He added that equities are not useful for measuring liquidity, and that hedge fund data might be a better choice. In terms of reducing the reliance on the recent crisis, he mentioned that Reinhart & Rogoff (2008) list specific dates of systemic events going back eight centuries. An event study analysis using their dataset might also shed light on what measures are appropriate.

Stijn van Nieuwerburgh and Lo both warned about look-ahead bias, especially when standardizing series or using data such as the Chicago Fed National Activity Index which are revised periodically. Van Nieuwerburgh also suggested using quantity rather than price data, such as aggregate interbank lending or surveys of loan officers, which might better capture informational asymmetries or issues in the banking sector. Mark Gertler agreed on this point, noting that the Fed’s liquidity programs during the crisis were largely aimed at reducing spreads, so that a focus on the quantity flows during these programs might contain additional information.

Christopher Sims noted that quantile regression can be inconsistent when estimating multiple quantiles simultaneously, especially for out-of-sample forecasting or when the exogenous variables are realizing values far outside historical experience, because the predicted quantiles can cross.
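The crossing problem is easy to see with two linear quantile fits whose slopes differ. In the sketch below, the intercepts and slopes are hypothetical stand-ins for separately estimated 20th- and 80th-percentile regressions; the lines are correctly ordered over a notional historical range of the predictor but cross once it moves far enough outside that range.

```python
def linear_quantile(intercept, slope):
    """Return a fitted linear conditional-quantile function of x."""
    def predict(x):
        return intercept + slope * x
    return predict


def crossing_point(a_low, b_low, a_high, b_high):
    """Value of x where two linear quantile fits intersect, if any."""
    if b_low == b_high:
        return None  # parallel lines never cross
    return (a_high - a_low) / (b_low - b_high)


# Hypothetical fitted coefficients for the 20th and 80th percentiles.
q20 = linear_quantile(-1.0, 0.5)
q80 = linear_quantile(2.0, 0.3)
x_cross = crossing_point(-1.0, 0.5, 2.0, 0.3)

in_sample_ok = q20(5.0) < q80(5.0)         # ordered within historical range
out_of_sample_bad = q20(20.0) > q80(20.0)  # 20th percentile above the 80th
```

Beyond the crossing point the implied 20th percentile exceeds the 80th, which is not a valid distribution; this is why extrapolating separately estimated quantiles to extreme predictor values calls for caution.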

Mark Gertler inquired about the sub-sample stability of these results, recalling that variables such as the term spread were better at forecasting recessions in the 1980s than they are today. He also observed that both this exercise and Simon Gilchrist’s papers have trouble forecasting consumption, perhaps because they do not separate between durables and non-durables consumption, which behave differently over the business cycle. In particular durable-goods consumption responds more strongly to the cycle, perhaps especially when the cycle is precipitated by financial factors.

Presenters

- Stefano Giglio, Professor of Finance, Yale School of Management

- Bryan Kelly, Professor of Finance at the Yale School of Management and Research Fellow at the National Bureau of Economic Research

What Do We Learn From Macroeconomic Evidence?

Presentation by Frank Schorfheide

A recent criticism leveled at the discipline of economics was the inability of its models to help predict the financial crisis of 2007-2009. This presentation was about the forecasting performance of the popular dynamic stochastic general equilibrium (DSGE) class of macroeconomic models. More specifically, the presentation focused on forecasting performance in a model with financial frictions, thereby linking the macroeconomy to the financial system explicitly.

The authors study the forecasting performance of three different versions of the Smets & Wouters (2007) DSGE model, by comparing them with an AR(2) model for different macroeconomic variables including output, consumption, investment, real wage growth, hours worked, inflation, and the Federal Funds Rate (FFR).

The first version of the model modifies Smets & Wouters (SW hereafter) to incorporate information from inflation expectations data. The second version adds financial frictions, and the third version includes data on the FFR and on current spreads (Baa bonds versus the 10-year Treasury rate) to improve the predictive power of the model.

Using data up to the first quarter of 2008, the authors find that all versions of the model fail to forecast the large drop in output growth and inflation after the bank failures of September 2008. However, using information from spreads and the FFR up to the third quarter of 2008 with the third version of the model, forecasting performance improves substantially, and the model is able to better match inflation and output growth behavior.

The authors also compare the model with professional forecasters and with an 11-variable VAR; they do not find evidence that the DSGE model performs any worse than these benchmarks.

Finally, the authors decompose the prediction of output growth to illustrate the importance of financial frictions during the crisis. They conclude that even though financial frictions play a role in the forecast, in terms of macro modeling they may be important only in bad economic times.

Discussion

Nobuhiro Kiyotaki highlighted that the DSGE model forecasts recessions from 2009 with current interest rate data but does not always perform well at forecasting in other cycles. This is in line with the conclusion of the authors that financial frictions need not be key for modeling other recessions. He acknowledged that it is hard to predict a crisis, but noted that the work of Schorfheide and del Negro also shows that, at least once a crisis is developing, we can use current data to predict outcomes.

Lars Hansen centered his discussion on some questions that future research should attempt to answer, given that the two papers are essentially published. He argued that DSGE models provide structure on shock accounting; in particular, during the financial crisis two types of shocks were important. One is an equity premium shock, which is important to understand why there is time variation in the equity premium; the other is a shock to the marginal efficiency of investment, which in the context of the financial crisis is related to financial constraints.

This opens some questions going forward. First, after decades of building proper micro foundations for macroeconomic models, connecting macroeconomic models to microeconomic data is still a challenge. Second, a key question for identification and estimation is why these financial shocks are uncorrelated with other shocks, and how they are connected to financial frictions. This is an open question. Another question, more related to asset pricing, is which shock exposures command higher prices in the model and how they vary by investment horizon. Finally, we need to keep advancing our understanding of how frictions operate. Currently some models tackle this by differentiating between the marginal investor and the average investor, while other models include a wedge between external and internal financing and give rise to multiple discount factors; still other models introduce economy-wide constraints, which sometimes bind and produce non-linearities.

Anil Kashyap and Mark Gertler raised two points during the group discussion. First, financial frictions are not primitive objects. Second, in terms of macroeconomic modeling, we have made progress in understanding and building models to see how a chain reaction starts once financial institutions are hit, but we have not made enough progress in understanding the origins of (the run-up to) the crisis.

Andrew Lo was concerned that in normal times financial frictions make the fit of the model worse, which suggests that the way the financial frictions have been built into the SW model is not working.

Presentation by Mark Watson

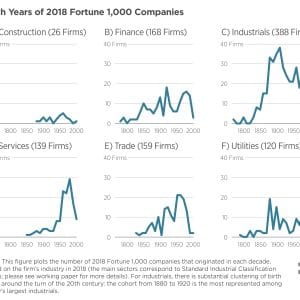

The authors seek to answer three important questions about the recession of 2007-2009, which had a major financial component. The first question is whether in terms of macroeconomic shocks the recession was different, or in other words, whether there were new factors hitting the economy that were not present in previous recessions. The second question is which shocks were important in the recession. Finally, they want to answer why the recovery has been sluggish in comparison to previous recessions.

To answer these questions the authors estimate a six-factor vector autoregression model on 200 quarterly time series spanning 1959 to the second quarter of 2011.
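The summary does not say how the factors were estimated, so the following is only a minimal sketch of one common approach: extract six factors from a standardized panel by principal components, then fit a first-order VAR to them by least squares. The simulated panel, the single lag, and the function names `extract_factors` and `fit_var1` are assumptions made for illustration, not the authors' specification.

```python
import numpy as np

def extract_factors(panel, n_factors):
    """Principal-components estimate of common factors from a T x N panel."""
    # Standardize each series so that no single series dominates the factors.
    z = (panel - panel.mean(axis=0)) / panel.std(axis=0)
    # The leading singular vectors of the standardized panel span the factor space.
    u, s, vt = np.linalg.svd(z, full_matrices=False)
    factors = u[:, :n_factors] * s[:n_factors]
    loadings = vt[:n_factors].T
    return factors, loadings

def fit_var1(factors):
    """OLS fit of F_t = c + A F_{t-1} + e_t (one lag is an assumption)."""
    y = factors[1:]
    x = np.column_stack([np.ones(len(y)), factors[:-1]])
    coef, *_ = np.linalg.lstsq(x, y, rcond=None)
    intercept = coef[0]
    a = coef[1:].T
    resid = y - x @ coef
    return intercept, a, resid

# Synthetic data only: 210 quarters (1959Q1 to 2011Q2), 200 series, 6 factors.
rng = np.random.default_rng(0)
T, N, R = 210, 200, 6
true_f = np.zeros((T, R))
for t in range(1, T):
    true_f[t] = 0.5 * true_f[t - 1] + rng.standard_normal(R)
lam = rng.standard_normal((N, R))
panel = true_f @ lam.T + rng.standard_normal((T, N))

factors, loadings = extract_factors(panel, R)
intercept, a, resid = fit_var1(factors)
```

The residuals `resid` are reduced-form innovations to the factors; identifying structural shocks from them requires further assumptions that this sketch does not make.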

Regarding the first question, the authors find no evidence of new shocks hitting the economy, only that the shocks were larger in magnitude. Their interpretation is that the shocks affected the macro-variables in the same way as in the past, given the size of the shocks. They do not find any new factors affecting the economy, so that in this sense this recession is different from others only in its magnitude.

To address the second question, the authors run a structural VAR employing instruments used elsewhere in the literature to identify the shocks. They find that some of these shocks are highly correlated, and that during the recession there was a group of financial shocks (two uncertainty shocks and two term spread shocks) that were important and are able to account for the behavior of GDP growth during this time period.

To answer the third question the authors decompose employment growth into its cyclical and trend components. They find the trend component to be quite important in employment, and interpret the results as indicating that what explains the slow recovery is not something unique to the shocks during the recession. The shocks (the cyclical component) are important, but the trend component is also very important. Therefore, the claim is that the slow trend in employment growth coming from demographic shifts (lower population growth and lower growth in female labor force participation) is a big force explaining the slow recovery.
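The summary does not state which decomposition the authors used. As an illustration of the general idea only, the sketch below splits a series into trend and cycle with a Hodrick-Prescott filter, using the conventional quarterly smoothing parameter of 1600. The filter choice, the function name `hp_filter`, and the synthetic employment-growth series are all assumptions, not the paper's method or data.

```python
import numpy as np

def hp_filter(y, lam=1600.0):
    """Hodrick-Prescott filter: returns (trend, cycle) with trend + cycle == y."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    # Second-difference operator: row i computes y[i] - 2*y[i+1] + y[i+2].
    d = np.zeros((n - 2, n))
    for i in range(n - 2):
        d[i, i:i + 3] = [1.0, -2.0, 1.0]
    # The trend minimizes sum((y - trend)^2) + lam * sum((d @ trend)^2).
    trend = np.linalg.solve(np.eye(n) + lam * d.T @ d, y)
    return trend, y - trend

# Synthetic employment growth: a slowly declining trend plus a cyclical swing.
quarters = np.arange(120)
slow_trend = 2.0 - 0.01 * quarters
cyclical = 1.5 * np.sin(2 * np.pi * quarters / 24)
growth = slow_trend + cyclical

trend, cycle = hp_filter(growth)
```

In this toy series, a low reading late in the sample reflects both the cyclical swing and the declining trend, which is the distinction the decomposition is meant to draw out.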

Discussion

Lars Hansen expressed concern about two findings in the paper. First, he argued that the evidence that the shocks were larger but worked through essentially the same transmission mechanism is weak, and therefore that we need to think more about this issue. Second, if the uncertainty shocks are shocks to the macroeconomy, one needs to impose restrictions linking these measures of uncertainty to the volatility of the model itself. In addition, if we want to think about how uncertainty alters behavior we need to think about non-linear channels, and the model in the paper is linear.

Nobuhiro Kiyotaki also pointed out that the conclusion that the shocks were the same, only bigger, is controversial. He asked whether the results might instead show that the economy is fundamentally different than it was before the crisis, in which case we cannot conclude that the shocks were simply the same.

Christopher Sims pointed out that in a structural VAR, when judging the accuracy of identification or the degree to which VARs are similar, we should worry not about the degree to which the structural shocks are correlated, but about the degree to which the impulse responses are similar.
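To make the comparison of impulse responses concrete, here is a minimal sketch for a first-order VAR. It computes impulse responses under two different impact matrices that imply the same innovation covariance, then measures how far apart the responses are. The coefficient values, the two Cholesky orderings, and the helper names `impulse_responses` and `irf_distance` are hypothetical and not taken from the paper or the discussion.

```python
import numpy as np

def impulse_responses(a, impact, horizons):
    """Responses of y_t = A y_{t-1} + B eps_t to unit structural shocks.

    Entry [h, i, j] is the response of variable i at horizon h to shock j.
    """
    n = a.shape[0]
    out = np.empty((horizons + 1, n, n))
    power = np.eye(n)
    for h in range(horizons + 1):
        out[h] = power @ impact  # A^h B
        power = a @ power
    return out

def irf_distance(irf_a, irf_b):
    """Largest absolute gap between two sets of impulse responses."""
    return float(np.max(np.abs(irf_a - irf_b)))

# Hypothetical VAR(1) coefficients and innovation covariance.
a = np.array([[0.5, 0.1], [0.0, 0.8]])
sigma = np.array([[1.0, 0.3], [0.3, 1.0]])

# Two recursive identifications that differ only in variable ordering.
impact_chol = np.linalg.cholesky(sigma)
perm = [1, 0]
impact_alt = np.linalg.cholesky(sigma[np.ix_(perm, perm)])[np.ix_(perm, perm)]

irf_chol = impulse_responses(a, impact_chol, horizons=8)
irf_alt = impulse_responses(a, impact_alt, horizons=8)
gap = irf_distance(irf_chol, irf_alt)
```

Both impact matrices reproduce `sigma` exactly, so the data cannot tell them apart; the nonzero `gap` shows that they still imply different dynamic responses, and those responses are the object on which identification schemes are compared here.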

Presenters

- Frank Schorfheide, Presenter

- Mark W. Watson, Presenter

- Lars Peter Hansen, Discussant

- Nobuhiro Kiyotaki, Discussant

Presentation Materials

- Presentation Slides – Schorfheide

- Presentation Slides – Watson

- Discussion Slides – Hansen

- Discussion Slides – Kiyotaki

Updates on Alternative Measurement Approaches

Presenters

- Andrew W. Lo, Charles E. and Susan T. Harris Professor, MIT Sloan School of Management; Director, MIT Laboratory for Financial Engineering

- Doyne Farmer, Director of the Complexity Economics Program at the Institute for New Economic Thinking at the Oxford Martin School; Baillie Gifford Professor in the Mathematical Institute at the University of Oxford; External Professor at the Santa Fe Institute

- Robert Engle, Michael Armellino Professor of Finance at New York University Stern School of Business

- Anil Kashyap, the Stevens Distinguished Service Professor of Economics and Finance at the University of Chicago Booth School of Business